On Friday, September 14th, I defended my PhD thesis. I have always tended to be anxious and easily agitated. I wanted to see how my body would react to the tense moments (almost 4 hours) of delivering a presentation and then answering questions from very smart people. So, equipped with a FitBit Pulse 2, I monitored my heart rate during my thesis defense.

Before I describe the correlations between the activities and heart rates, in case you are wondering, I passed the exam!

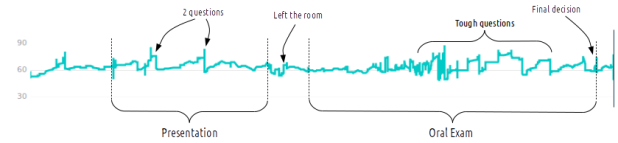

The figure shows the variation of beats per minute (bpm) over time. The y-axis corresponds to bpm, and the x-axis to time. The readings start at 8:20 a.m.

My day started at 5 a.m. I have a very strict nutrition schedule, and I had to wake up to have my breakfast: eggs and oatmeal, as I didn’t want to feel heavy later. Then, I called my mom, as I usually do, and by 7 a.m. I was heading to the university. Once I got to the lab, I went to the room where the thesis defense was scheduled. I set up the connection between my computer and the media equipment, and put out a few pastries I had brought for the audience.

At 8:30 a.m. I was starting to feel nervous: I was only 30 minutes away from the public seminar. Everything was ready, and in my head I was repeating the first words of my presentation. My supervisor asked me to install Skype because some evaluators would be joining via a Skype call. My heart rate went up a little bit, because I didn’t know if there was an installer for Ubuntu. In the end, I installed it without problems, but I was nervous.

The presentation started at 9 a.m. I was nervous, but not too much, because I had practiced the delivery of my presentation several times. During the presentation, I was interrupted twice, because some attendees had questions. You can see the spikes in beats per minute when I got those two questions.

After the presentation, I relaxed a little bit. I went to the washroom, and then I ate a cookie so I could survive the next two hours. When I went back into the room, the exam chair told me I had to leave the room. Since I was nervous, my heart rate went up temporarily.

The exam started around 10:10 a.m., and I felt confident about the first questions. Then, the questions became tougher and tougher. I felt like I had a fever, and I am sure my face was bright red. However, I could answer the questions. I don’t remember much, though. I was just too nervous.

Then the chair asked me to step out of the room while the evaluators deliberated. After a few minutes, I was asked to come back in. One of the evaluators started a sentence with “I regret to tell you…” And that is the last spike in the heart rate figure. A mini-heart attack. But then he said, “… passed the exam, congratulations”.

During the whole process, my average heart rate was about 62 bpm, and the highest was around 88 bpm. Considering I was just standing or seated, the variation in heart rate seems significant. And although I know the instrument I used to measure my heart rate is not accurate, I did it just for fun. The same reason I did a PhD: just for fun.